Risking All -- Artificial Intelligence

"Right now, they're not more intelligent than us, as far as I can tell. But I think they soon may be.""Right now, what we're seeing is things like GPT-4 eclipses a person in the amount of general knowledge it has and it eclipses them by a long way. In terms of reasoning, it's not as good, but it does already do simple reasoning.""And given the rate of progress, we expect things to get better quite fast. So we need to worry about that. You can imagine, for example, some bad actor like [Russian President Vladimir] Putin decided to give robots the ability to create their own sub-goals. [Eventually this might] create sub-goals like 'I need to get more power'.""I've come to the conclusion that the kind of intelligence we're developing is very different from the intelligence we have. We're biological systems and these are digital systems. And the big difference is that with digital systems, you have many copies of the same set of weights, the same model of the world.""And all these copies can learn separately but share their knowledge instantly. So it's as if you had 10,000 people and whenever one person learnt something, everybody automatically knew it. And that's how these chatbots can know so much more than any one person.""In the shorter term AI would deliver many more benefits than risks, so I don't think we should stop developing this stuff. [International competition would mean that a pause would be difficult]. Even if everybody in the US stopped developing it, China would just get a big lead.""It is the responsibility of government to ensure AI was developed] with a lot of thought into how to stop it going rogue."Geoffrey Hinton, 75, 'godfather' of Artificial Intelligence


The Center for AI Safety website suggests a number of possible disaster scenarios:

- AIs could be weaponized - for example, drug-discovery tools could be used to build chemical weapons

- AI-generated misinformation could destabilize society and "undermine collective decision-making"

- The power of AI could become increasingly concentrated in fewer and fewer hands, enabling "regimes to enforce narrow values through pervasive surveillance and oppressive censorship"

- Enfeeblement, where humans become dependent on AI "similar to the scenario portrayed in the film Wall-E"

"Advancements in AI will magnify the scale of automated decision-making that is biased, discriminatory, exclusionary or otherwise unfair while also being inscrutable and incontestable.""[They would] drive an exponential increase in the volume and spread of misinformation, thereby fracturing reality and eroding the public trust, and drive further inequality, particularly for those who remain on the wrong side of the digital divide.""[Many AI tools essentially] free ride [on the] whole of human experience to date.""[Many are trained on human-created content, text, art and music they can then imitate - and their creators] have effectively transferred tremendous wealth and power from the public sphere to a small handful of private entities."Oxford's Institute for Ethics in AI senior research associate Elizabeth Renieris"You've seen that recently it was helping paralyzed people to walk, discovering new antibiotics, but we need to make sure this is done in a way that is safe and secure.""Now that's why I met last week with CEOs of major AI companies to discuss what are the guardrails that we need to put in place, what's the type of regulation that should be put in place to keep us safe." "People will be concerned by the reports that AI poses existential risks, like pandemics or nuclear wars.""I want them to be reassured that the government is looking very carefully at this."British Prime Minister Rishi Sunak


The welcome screen for the OpenAI ChatGPT app is displayed on a laptop screen. (Leon Neal/Getty Images)

The chief executives of the world's largest AI laboratories have publicly warned that Artificial Intelligence could conceivably, at some point in the near future, become as dangerous as the outbreak of nuclear war. The grim message was delivered by elite executives and scientists, including Sam Altman, chief executive of ChatGPT producer OpenAI, and Demis Hassabis, founder of DeepMind, all of whom signed a statement urging governments to treat the risks of AI as a global priority on a par with pandemics and nuclear war.

The statement represents the first time global AI executives have come together as a group to call for urgent action on the risks to humanity posed by the emerging technology. It was designed to "voice concerns about some of advanced AI's most severe risks" within a "broad spectrum of concerns relating to the technology", according to the Center for AI Safety, the non-profit that organized the statement, which was conveyed in a letter to heads of world governments.

"Mitigating the risk of extinction from AI should be a global priority alongside other societal scale risks such as pandemics and nuclear war", the statement read, posted two weeks ago on the Center for AI Safety website, signed by over 350 executives and researchers, including former Google AI chief Geoffrey Hinton and Dario Amodei, head of AI lab Anthropic.

With the current rise of a new generation of highly capable AI chatbots, concerns about artificial intelligence systems outsmarting humans and running amok have intensified. Around the world, countries are scrambling to design regulations for the developing technology, with the European Union blazing the trail: its AI Act is in the approval stages before the year is out. OpenAI, which became the world's most prominent AI developer with the rapid rise of ChatGPT, has called for an international body modelled on the International Atomic Energy Agency to manage the technology.

"There's a variety of people from all top universities in various different fields who are concerned by this and think that this is a global priority; [many of whom were] sort of silently speaking among each other", noted Dan Hendrycks, executive director of the Center for AI Safety, based in San Francisco. The expectation is that an existential risk from AI could arrive within a decade, with software surpassing human experts in most fields.


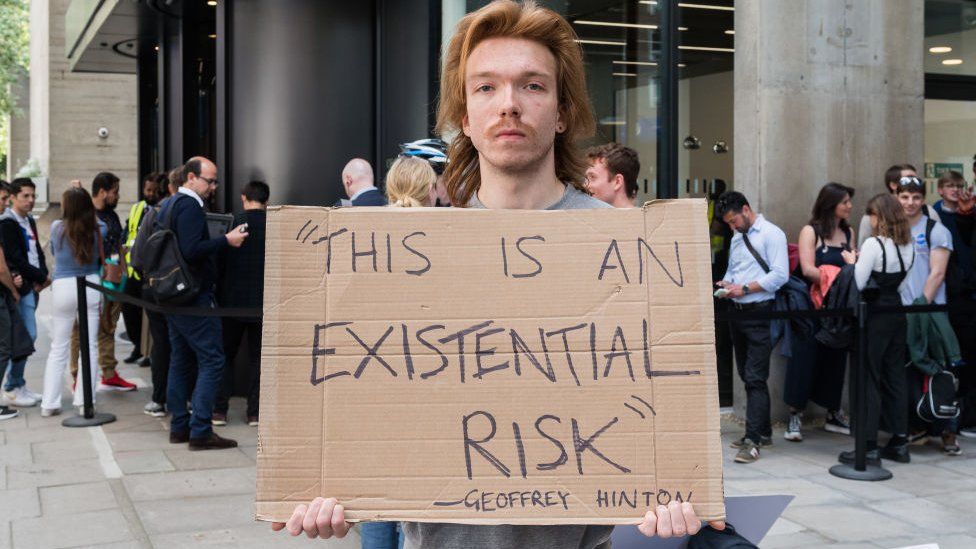

A protester outside a London event at which Sam Altman spoke. (Future Publishing/Getty Images)

Labels: AI Warning, Artificial Intelligence, Existential Threat, Global Priority, The Genie out of the Bottle
