The Bleak Hopelessness and Despair of Suicide

"People can have a cognitive shutdown or blank, as any of us do, when we can't remember things during times of extreme stress."

"[Having a three-digit hotline would] facilitate people's access to care at times when they are in dire need."

Madelyn Gould, psychiatrist, Columbia University


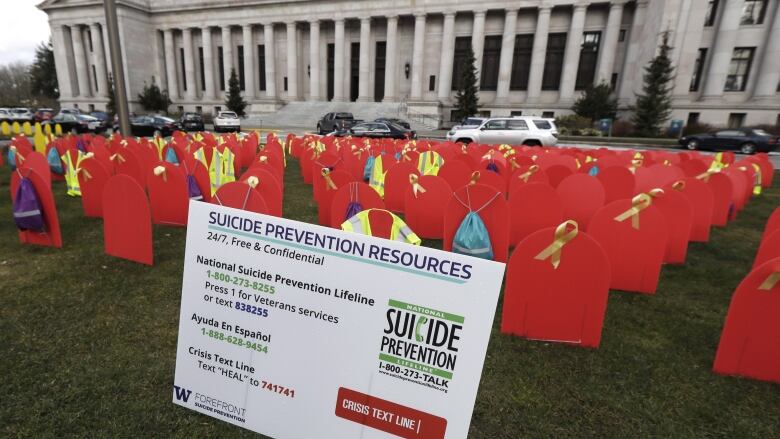

Suicide prevention phone numbers and red mock tombstones designating some of the more than 1,000 people who took their lives by suicide in Washington state in 2017 are displayed on a grassy area in March 2019, in Olympia, Wash. (Ted S. Warren/Associated Press)

"People tell you how they feel every day at every moment [online]. People don't realize that they are putting out these signals. The beauty of AI is that it's all training data. We don't know who anyone is. We're not reading stuff. Everything is just converted to numbers. It's very clinical and non-invasive."

"You can't always prevent suicide, but it is valuable to know who is at risk and who is not at risk."

"The more we're trained to help our friends, the more power we have. Research can't be the magic answer. We still have to interact."

"I'm excited about the next couple of years. We will go from 'We built it' to 'This is what we are going to do with it'."

"In science, you can be on the frontier, or you can be in the application. In psychiatry, there's a big need for application. I think there's room for tools that do something different. It's exciting when you find something that you think is true, and you can build something that hasn't been built before."

Zachary Kaminsky, molecular biologist, researcher, Royal Ottawa Mental Health Centre


In the United States, suicide is of such concern that Congress, intending to address the nation's growing suicide epidemic, pushed for a three-digit number for the national suicide prevention hotline in the hope of saving lives. Seconds count in responding to people in extreme emotional distress: dialling 911 proved of little help, and the current 11-digit U.S. national hotline number eluded desperate people's memory at times of high emotional stress.

Canada is struggling with a similar situation, where an estimated 11 people a day feel hopeless enough to take their own lives and 100,000 attempt to kill themselves every year. And so the innovative 9-8-8 dialling code for the U.S. national suicide prevention hotline, which went into effect at the turn of the year, has attracted the attention of activists who, through the 988 Campaign for Canada, are trying to persuade government authorities in Canada to follow suit.

Now, a researcher with the Royal Ottawa Mental Health Centre, formerly with Johns Hopkins University, has conducted novel research in artificial intelligence and the detection of suicidal ideation. The aim is artificial intelligence capable of assessing millions of social media posts and isolating words or images that send up a red flag for thoughts of suicide. Adolescents, whose growing incidence of suicide horrifies society, often disclose suicide risk factors on social media rather than speak to family or doctors.


In June, 2018, food writer Hadley Tomicki is accompanied by his daughter Kira as he takes a picture of a mural of Anthony Bourdain in Santa Monica, Calif. The culinary celebrity and documentarian killed himself on June 8, just a few weeks before his 62nd birthday. (Chris Pizzello/The Associated Press)


People tend to post their most candid thoughts and personal information on social media. The question was how to make use of that information in the hope of preventing suicides. Reading hundreds of thousands of posts by hand was an impossible task, which led Dr. Kaminsky to the idea that artificial intelligence could be trained to recognize words and patterns of words and then respond automatically, for example through an algorithm that sends a suicidal person information on counselling.

Dr. Zachary Kaminsky (Tony Caldwell/Postmedia)

An AI system used to discover 'hot spots' of suicidal thinking could guide where prevention campaigns in schools or neighbourhoods are deployed. AI also has the potential to separate the roughly ten percent of the general population who think about suicide from the 0.5 percent who tend to act on those thoughts. Dr. Kaminsky points out that predictions about individuals can never be failsafe; false negatives will always occur.

The researcher's initial two-year study scanned the Twitter accounts of English speakers worldwide. Tests screened for words such as 'burden', 'loneliness', 'stress', 'depression', 'insomnia', 'anxiety' and 'hopelessness'. These words were selected because researchers recognized them as related to feelings that suicidal people experience; suicidal people often think of themselves as a burden to others, for example.

Machine learning lets the artificial intelligence reach beyond the initial words to identify patterns and networks of other associated words, such as 'love', a word commonly used by people thinking of suicide after the breakdown of a romantic relationship. Math is used to assign each Twitter feed multiple scores, so that a year's worth of scores, rather than a year's worth of tweets, can be plotted, revealing patterns of thought in the process.
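To make the idea of turning a feed into scores a little more concrete, here is a minimal Python sketch. It is not Dr. Kaminsky's algorithm, which relies on machine learning; it assumes a plain keyword count over the screening words named above, and the names `tweet_score`, `monthly_scores` and the sample feed are hypothetical. Each tweet gets a score (the share of its words that match the list), and those scores are averaged by month, so a year of tweets becomes a short series of numbers that can be plotted.

```python
import re
from collections import defaultdict
from datetime import date

# Hypothetical keyword list: the screening words named in the post.
KEYWORDS = {"burden", "loneliness", "stress", "depression",
            "insomnia", "anxiety", "hopelessness"}


def tweet_score(text: str) -> float:
    """Return the fraction of a tweet's words that appear in KEYWORDS."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0
    hits = sum(1 for word in words if word in KEYWORDS)
    return hits / len(words)


def monthly_scores(feed):
    """Average per-tweet scores by (year, month) so a feed becomes a time series."""
    buckets = defaultdict(list)
    for day, text in feed:
        buckets[(day.year, day.month)].append(tweet_score(text))
    return {month: sum(scores) / len(scores)
            for month, scores in sorted(buckets.items())}


# Hypothetical sample feed: (date posted, tweet text) pairs.
feed = [
    (date(2018, 1, 3), "So much stress and insomnia lately"),
    (date(2018, 1, 20), "Great day with friends"),
    (date(2018, 2, 5), "Feeling like a burden"),
]

for (year, month), score in monthly_scores(feed).items():
    print(f"{year}-{month:02d}: {score:.3f}")
```

A fixed word list like this cannot pick up associated words such as 'love' on its own; that is the gap the machine-learning approach described above is meant to fill.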

Some people, noted Dr. Kaminsky, tend to feel better after they tweet about suicide, whereas others tend to feel worse. A large, sympathetic response from within a social network has the potential to help people feel better about themselves. The algorithm has been tested only on Twitter, but the technique could be adapted to other social media, including platforms based on images rather than words.


Suzanne and Raymond Rousson point to a collage of photographs of their son, Sylvain, at their home south of Ottawa. Sylvain killed himself last January. He was 27.

Labels: AI, Counselling, Mental Health, Research, Suicide
